(This column originally appeared in Forbes)

If you’re wondering why, all of a sudden, artificial intelligence has exploded into your life, you’re not alone. Many of my clients and friends have been asking a similar question ever since late last year, when OpenAI released its ChatGPT product and everyone went “wow!”

With such an unprecedented show of how generative AI can conduct very human-like conversations and provide (mostly) accurate answers, every other tech company from Microsoft to Google to Salesforce suddenly jumped into the fray with its own offerings. Since then, countless apps, startups and entrepreneurs have been flooding the market with their products in the hopes of becoming the next big thing.

But why? AI’s been around for a while, yet it’s only in the past year or so that it’s received so much public attention. What happened? The answer is: hardware. And like most things, it’s not just one piece of hardware. It’s actually three hardware developments that have come together to form a perfect storm and create this new technology wave. What three things?

The first is about storage.

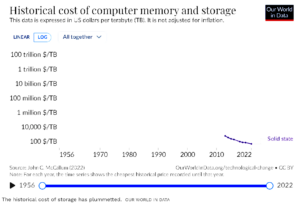

Just take a look at the chart above from Our World in Data. In 1990, a terabyte of storage cost a whopping $7.4 million. What does it cost now? $14.30. And no, I’m not misplacing a decimal. Are you, like me, old enough to remember those 1.44 MB floppy disks? Thanks to plummeting material costs and economies of scale, a megabyte of storage, which cost $9,200 in 1956 (that’s about $85,000 in today’s dollars), now costs just $0.00002. I’m an accountant and I don’t even know how to read that number, but it’s clear that storage is dirt cheap.
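For readers who, like me, want to see what those numbers actually mean, here is a small Python sketch that does the arithmetic. It only uses the prices quoted above; the variable and function names are my own, and treating a terabyte as 1,000,000 megabytes (the decimal convention) is an assumption.

```python
# Storage prices quoted in the column, in US dollars.
TB_COST_1990 = 7_400_000.00  # one terabyte, 1990
TB_COST_NOW = 14.30          # one terabyte, today
MB_COST_1956 = 9_200.00      # one megabyte, 1956 (not inflation-adjusted)
MB_COST_NOW = 0.00002        # one megabyte, today

# Assumption: decimal units, so 1 TB = 1,000,000 MB.
MB_PER_TB = 1_000_000


def times_cheaper(old_price: float, new_price: float) -> float:
    """Return how many times cheaper the new price is than the old one."""
    return old_price / new_price


tb_drop = times_cheaper(TB_COST_1990, TB_COST_NOW)
mb_drop = times_cheaper(MB_COST_1956, MB_COST_NOW)

print(f"Terabyte, 1990 to today: about {tb_drop:,.0f} times cheaper")
print(f"Megabyte, 1956 to today: about {mb_drop:,.0f} times cheaper")

# $0.00002 is easier to read in cents: 0.002 cents, i.e. two-thousandths of a cent.
print(f"Today's megabyte costs {MB_COST_NOW * 100:.3f} cents")

# Sanity check: the per-megabyte price implies roughly $20 per terabyte,
# the same ballpark as $14.30 (the gap presumably reflects different sources or dates).
print(f"Implied cost per terabyte: ${MB_COST_NOW * MB_PER_TB:.2f}")
```

In other words, the price of a terabyte has fallen by a factor of roughly half a million since 1990.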

AI tools like ChatGPT are driven by large language models (LLMs), which are trained on huge amounts of text so they can predict responses and come up with correct answers. AI doesn’t work without an enormous data set to learn from. ChatGPT’s knowledge comes from a huge swath of the Internet, along with data from other sources. OpenAI gathered countless terabytes of that text and then, for years, trained its algorithms on the dataset. But none of this would have happened if each terabyte cost $7.4 million. Because storage has become so cheap, AI companies can keep almost limitless amounts of information for their models to learn from, helping artificial intelligence become…well…more intelligent.

So that’s the first big hardware reason why AI has exploded. The next reason is processing.

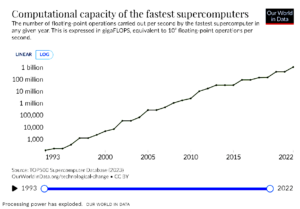

According to The Economist, the price of computation today is roughly one hundred-millionth of what it was in the 1970s, when the first microprocessors became commercially available, which is another number I can’t really comprehend. You don’t really need to know what a gigaflop is, other than that it’s a measure of processing speed: one billion floating-point operations per second. Suffice it to say that in 1993 this number was in the hundreds. Now it’s around a billion gigaflops. The takeaway is that, thanks to the same economies of scale and Moore’s Law, computing today is hundreds of millions of times cheaper, and vastly faster, than it was just a few decades ago.

Besides data, AI needs lots of processing. Take a self-driving car, for example. Elon Musk told podcaster Lex Fridman back in 2021 that making his Tesla vehicles fully self-driving turned out to be “a lot harder” than he thought, even with all of these incredible advances in processing speed and storage size. That’s because the AI behind a self-driving car has to process a constant, unpredictable stream of data from video, audio and other inputs and then make a decision within a split second; otherwise a puppy could be run over by a Tesla…or worse (if there is such a thing).

Ultimately, Musk and others will succeed with self-driving vehicles, but it’s still going to take some time. In the meantime, we can already take advantage of the fast processing behind generative AI tools that give us quick answers to our questions. Answering a question demands far less split-second processing than driving a car, running a robot or operating any other device that has to make instant decisions like a human.

So there’s cheap storage and fast processing and then one other hardware thing that’s needed to really make AI work: the cloud.

No, not this cloud. I mean cloud computing. All those millions and millions of servers processing information from all over the world and then delivering output back to the phones, cars, computers, tablets and other devices making the requests. Storing all that data on a device isn’t affordable, nor are the processing chips needed to run those queries. The reality is that you can’t store the entire Internet on your phone right now.

But keeping this information on all those computers in the cloud means we can tap into all of that storage and processing power and get our answers from multiple places almost immediately. The cloud market has grown from about $145 billion in 2017 to about $615 billion and is projected to reach $849 billion by 2026. AI is fueling this growth with its hunger for ever more data and processing.
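To put those market figures in perspective, the Python sketch below turns them into compound annual growth rates. The $615 billion figure doesn’t come with a year attached; the code assumes it is the 2023 number, which is my own assumption, and the rates will shift if that’s off.

```python
# Cloud market sizes quoted in the column, in billions of US dollars.
MARKET_2017 = 145.0
MARKET_NOW = 615.0   # ASSUMPTION: treated as the 2023 figure
MARKET_2026 = 849.0  # projection

NOW_YEAR = 2023  # hypothetical year for MARKET_NOW


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


past = cagr(MARKET_2017, MARKET_NOW, NOW_YEAR - 2017)
future = cagr(MARKET_NOW, MARKET_2026, 2026 - NOW_YEAR)

print(f"2017 to {NOW_YEAR}: about {past:.1%} growth per year")
print(f"{NOW_YEAR} to 2026 (projected): about {future:.1%} growth per year")
```

Under that assumption, the market grew at roughly 27% a year and is projected to keep growing at about 11% a year, slower but still rapid.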

Huge amounts of storage space. Lightning fast processing. Servers all over accepting and delivering information.

None of this happened overnight. But only in the past few years have these three things passed their tipping point of usefulness, making the AI experience we’re now having feasible. That’s why AI is all over the place. Its slower-than-expected growth was never a software problem. It’s always been due to hardware limitations. We’ve still got plenty of hardware limitations. But you can expect those limitations to work themselves out in the coming years.